Spreadsheet Smarts: How Sidekick Outperforms the State of the Art

Tech publications are excited about a new development from research at Microsoft. The technique, SpreadsheetLLM, is described in detail in a newly published research paper. As research papers go, it's on the more accessible side. Pro tip: read the abstract, look at the images, read the bold text and the paragraph following it, and skip the math equations.

First, let's clear up two pieces of misinformation common in the reporting:

-

That it's a new LLM (Large Language Model) - it's not. It is a technique for compressing and rearranging complex and large spreadsheets so that any LLM can understand them.

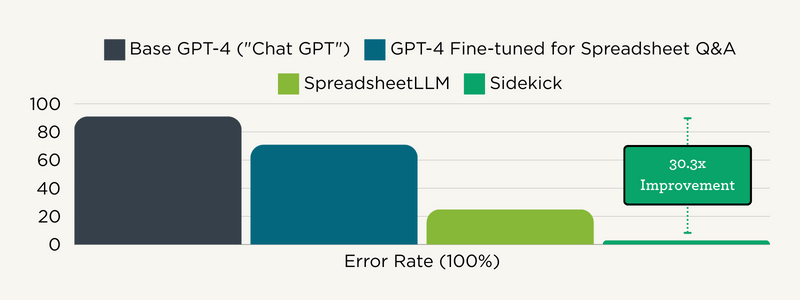

- That it's a huge breakthrough for enterprise productivity. You have to dig into the performance numbers to dispel this, though. As a baseline, GPT-4, the best-performing model in their benchmarks, can answer questions about large, non-trivial spreadsheets 9% of the time... When fine-tuned on spreadsheets, it can get up to 29%. When you apply their new technique, it reaches... 75%

Don't get me wrong, 9% to 75% is a big leap. This is a powerful technique and great research from a leader in the field. Conversely, some of our partners and software vendors hesitate to adopt AI features. They think that all AI technologies are becoming "commoditized." 9% performance from the commercially available version doesn't *sound* commoditized.

Consider this: how much more valuable is a system that answers correctly 95% of the time compared to one at 75%? I'd argue it's five times more valuable. The error rate is reduced from 25% of the time to 5%. Remember those numbers and value comparison; we'll return to them.

Let's talk about Sidekick.

We're in the late stages of a beta with our first agentic AI suite of features, Sidekick. Sidekick is the ideal assistant for managing community-scale data. It uses tools integrated with our data catalog, allowing you to easily find, analyze, visualize, and communicate data through natural language commands. We've got a high-level overview of the data available to Sidekick and a detailed catalog. You can also read more about how we put AI to work for good. In the clip below, one of our early Sidekick users shows how this data, AI, and doing good in the world converge.

Dr Sarah Story, Director of Public Health, Jefferson, CO talks about using Sidekick to quickly understand and communicate about her County

Our library contains over 5 billion data points sourced from over 50 sources and nearly 1,000 tables and formats. No LLM on the market can even read that data set at once, let alone accurately answer questions and analyze non-trivial chunks.

It's all about evaluation.

We're rigorously evaluating and enhancing Sidekick's performance across multiple areas. This includes a comprehensive suite of automated tests. They end up like those tasks tested in SpreadsheetLLM—understanding and responding to queries about large data sets. Until you've tested your AI system at scale, you have to assume any time it worked was a coincidence. So, how faithful is Sidekick to the data in our library when answering questions? 97%

Direct excerpt from a summary of our most recent Sidekick Evals Baseline; FAITH is the metric for Faithfulness to the input data, and 0.971 = 97.1%; it is the faithfulness metric from Ragas, an LLM evaluation framework

Direct excerpt from a summary of our most recent Sidekick Evals Baseline; FAITH is the metric for Faithfulness to the input data, and 0.971 = 97.1%; it is the faithfulness metric from Ragas, an LLM evaluation framework

We run various test suites to assess different aspects of performance. We look at data search, geographic search, availability, Q&A accuracy, and analytical workflows. That 97% number is the faithfulness score on multiple runs of the questions and answers suite and is a lower bound - for speed and cost reasons, we use a separate AI system to judge the answers, and it can undercount correct answers a little (better safe than sorry?). Not only is it a lower bound, but those suites are made up of harder questions than the average, everyday use case. We want a discriminating test, after all. We also frequently add samples for questions that previously caused a problem to ensure they don't sneak back in.

A simple comparison.

We love research like SpreadsheetLLM. Not only does it show how specialized human skill and innovation can boost commodity LLM services to exciting performance levels, but the deliberate way it measures and describes baselines and improvements invites easy comparison. In this case, we're extremely happy with how Sidekick compares to the new state of the art in spreadsheet table understanding. 97% faithfulness to our data is over 5x as valuable as 75% faithfulness the SpreadsheetLLM team achieved.

Here, the researchers focused on 64 spreadsheets containing 1-4 tables ranging from <4,000 tokens to >32,000 tokens. Our dataset includes information from over 50 sources and nearly 1,000 tables. Processing this with an LLM takes about 249 billion tokens, with the average numeric value using just under five tokens each. Both approaches involve substantial pre-processing of the data for consumption by an LLM.

So what does it all mean?

Ultimately, we can draw a clear conclusion from these comparisons: Sidekick processes a data catalog much larger than SpreadsheetLLM's, and errors are much less frequent. At mySidewalk, we know that for our customers and users, the effectiveness of any tool lies not just in its technical capabilities but also in its ability to earn trust. Sidekick's performance is an admirable example of how we bring these ideals to life at mySidewalk.

AI models and products from major labs are impressive - but small expert teams can still squeeze out big performance gains. We've seen this concretely thanks to the SpreadsheetLLM research.

Share this

You May Also Like

These Related Stories

No Comments Yet

Let us know what you think